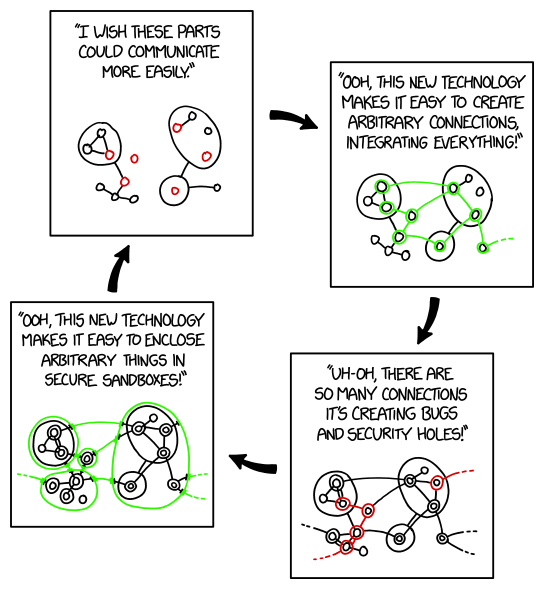

There’s another important problem that Docker solves, beyond most of the issues pointed out here.

Security.

By using containerization, Docker effectively creates another important barrier that is incredibly hard to escape: the container’s own OS environment.

If one server is running multiple Docker containers, a vulnerability in one container does not expose the others. This is a huge security improvement. Now an attacker needs to breach the application and then break out of the container in order to directly access other parts of the host.

Also, if the Docker images are big, the dev should pick a different base image. You can easily have containers of around 100 MB now. With “distroless” images it’s maybe down to 30 MB, if I recall correctly. Far from 1 GB.

Reproducibility is also a huge efficiency booster. “Here, run this command and it will work perfectly on your machine.” And it actually does.

It also makes self-healing servers reliably achievable, which means businesses don’t actually need to have people available 24/7.

Containerization is maybe one of the greatest marvels in software development of the last 10+ years.

Yes, yes, you really should.

I said this a year and a half ago and I still haven’t. Awful decision. I now own servers too, so I should really learn it.

I said the same thing, and in 2 days I deployed 4 containers that fixed problems in my life, so that’s good.

What did you deploy?

I’m messing with self-hosting an LLM with a web front end right now.

Actually, I only started 2 days ago. On my device I have AdGuard Home, which blocks ads and acts as a DNS server; MeTube, which is a web UI for yt-dlp; Memos; and PhotoPrism. I’m still messing with them, but I’ve learned how to see the processes, stop and start them, and read the logs, so I’ve gained some knowledge.

Oh, I’m totally getting MeTube. I use yt-dlp with a script.

BTW, it doesn’t have advanced configs, but give it a try; there are also some others you can try. I have MeTube because my dad wants something easy: just paste a YouTube link and download. That’s why I set it up for him.

Oof. I’m anxious that folks are going to get the wrong idea here.

While OCI containers do provide security benefits, they are not part of a healthy security architecture.

If you see containers advertised on a security architecture diagram, be alarmed.

If a malicious user gets terminal access inside a container, it is nice that there’s a decent chance that they won’t get further.

But OCI was not designed to prevent malicious actors from escaping containers.

It is not safe to assume that a malicious actor inside a container will be unable to break out.

Don’t get me wrong, your point stands: Security loves it when we use containers.

I just wish folks would stop treating containers as “load bearing” in their security plans.

Isn’t Docker massively insecure when compared to the likes of Podman, since Docker has to run as a root daemon?

I prefer Podman. But Docker can run rootless. It does run under root by default, though.

I don’t have in-depth knowledge of the differences and how big they are, so take the following with a grain of salt.

My main point is that using containerization is a huge security improvement. Podman seems to be even more secure. Calling Docker massively insecure makes it seem like something we should avoid, which takes focus away from the enormous security benefit containerization gives. I believe Docker is fine. I do use Podman myself, but only because Podman Desktop is free and Dockerfiles seem to run fine with Podman.

Edit: After reading a bit I am more convinced that the Podman way of handling it is superior, and that the improvement is big enough to recommend it over Docker in most cases.

Not only that, but containers in general run on the host system’s kernel, so their actual isolation is pretty minimal compared to, for example, virtual machines.

What exactly do you think the VM is running on, if not the system kernel with potentially more layers?

Virtual machines do not use the host kernel; they run a full OS, kernel, cock and balls, on virtualized hardware on top of the host OS.

Containers use the host kernel and hardware without any layer of virtualization.

You don’t have to ship a second OS just to containerize your app.

Call me crusty, an old fart, unwilling to embrace change… but Docker has always felt like a cop-out to me as a dev. Figure out what breaks and fix it so your app is more robust; stop being lazy.

I pretty much refuse to install any app which only ships as a docker install.

No need to reply to this, you don’t have to agree and I know the battle has been already lost. I don’t care. Hmmph.

Why put in a little effort when we can just waste a gigabyte of your hard drive instead?

I have similar feelings about how every website is now a JavaScript application.

Yeah, my time is way more valuable than a gigabyte of drive space. In what world is anyone’s not today?

It’s a gigabyte of every customer’s drive space.

The value add is even better from a customer’s perspective.

That I can install far less software on legacy devices because everything new is ridiculously bloated?

Don’t you get it? We’ve saved time and added some reliability to the software! Sure, it takes 3-5x the resources it needs and costs everyone else money, but WE saved time and can say it’s reliable. /S

3-5x the resources my ass.

Mine, on my 128 GB dual-boot laptop.

I’ve got you beat. 32 GB eMMC laptop.

I need every last MB on this thing. It’s kind of nice because I literally cannot have bloat, so I clear out folders before I forget where things went. I only really use it for the internets and to SSH into my servers, but it’s also where I usually make my bootable USB drives, so I need 2-5 GB free for whichever ISO I want to try out. I really detest the idea of downloading to one USB, then dd-ing that to another. I should probably start using Ventoy or something, but I guess I’m old-school stubborn.

I tried using flatpak and docker, but it’s just not gonna happen.

:-)

Going back in time is cheating a bit, but around 2013 my computer was an 8 GB netbook. I carefully segregated my files into a couple of GB that I’d keep available, and the rest on an external HDD. To this day I keep that large/small scheme, though both parts have grown since then.

How many Docker containers would you deploy on a laptop? Also, 128 GB is tiny even for an SSD these days.

None, in fact, because I still haven’t gotten into using Docker! But that is one of the factors that pushes it down the list of things to learn.

I’ve had a number of low-storage laptops, mostly on account of low budget. Ever since taking an 8GB netbook for work (and personal) in the mountains, I’ve developed space-saving strategies and habits!

I love docker… I use it at work and I use it at home.

But I don’t see much reason to use it on a laptop? It’s more of a server thing. I have no docker/podman containers running on my PCs, but I have like 40 of em on my home NAS.

Yeah, I wonder if these people are just being grumpy grognards about something they don’t at all understand? Personal computers are not the use case here.

A gigabyte of drive space is something like 10-20 cents on a good SSD.

Docker is more than a cop-out, for more than one use case. It’s a way to quickly deploy an app irrespective of the environment, so you can scale and rebuild quickly. It solves a problem that used to be solved by VMs, and in that way it’s more efficient.

Well, nope. For example, FreeBSD doesn’t support Docker – I can’t run dockerized software “irrespective of environment”. It has to be run on one of supported platforms, which I don’t use unfortunately.

A lack of niche OS compatibility isn’t much of a downside. Working on 99.9% of all active OSes is excellent coverage for a software suite.

Besides, FreeBSD has Podman support, which is something like 95% cross-compatible with Docker. You basically do have Docker support on FreeBSD, just harder.

How is POSIX a niche? 🤨

Just POSIX and no other compatibility? Pretty niche, man.

To deploy a Docker container to a Windows host, you first need to install a Linux virtual machine (via WSL, which uses Hyper-V under the hood).

It’s basically the same process for FreeBSD (minus the optimizations), right?

Containers still need to match the host OS/architecture; they are just sandboxed and layer in their own dependencies separately from the host.

But yeah, you can’t run them directly. Same for Windows, except I guess there are actual Windows Docker containers that don’t require WSL, but if people actually use those, it’d be news to me.
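To make the OS/architecture point concrete, here is a minimal Python sketch that builds an `os/arch` platform string in the style Docker and OCI use (e.g. `linux/amd64`) for a host, then checks whether an image for a given platform could run natively on it. The alias table is an assumption covering only a few common architectures, and the check deliberately ignores emulation (e.g. QEMU) and compatibility layers, which real setups can use to bridge the gap.

```python
import platform
from typing import Optional

# Assumed, partial mapping from platform.machine() values to the
# architecture names used in Docker/OCI platform strings ("os/arch").
ARCH_ALIASES = {
    "x86_64": "amd64",   # Linux and macOS on Intel/AMD
    "amd64": "amd64",    # Windows reports "AMD64", FreeBSD reports "amd64"
    "aarch64": "arm64",  # Linux on 64-bit ARM
    "arm64": "arm64",    # macOS on Apple silicon
}


def host_platform(system: Optional[str] = None, machine: Optional[str] = None) -> str:
    """Return an 'os/arch' string for the given host, or for the current host by default."""
    os_name = (system or platform.system()).lower()
    raw_arch = (machine or platform.machine()).lower()
    return f"{os_name}/{ARCH_ALIASES.get(raw_arch, raw_arch)}"


def runs_natively(image_platform: str, host: str) -> bool:
    """A container shares the host kernel, so OS and architecture must both match."""
    return image_platform.lower() == host.lower()


if __name__ == "__main__":
    freebsd = host_platform("FreeBSD", "amd64")
    for image in ("linux/amd64", "linux/arm64"):
        print(image, "on", freebsd, "->", runs_natively(image, freebsd))
    # linux/amd64 on freebsd/amd64 -> False
    # linux/arm64 on freebsd/amd64 -> False
```

Under this model a `linux/amd64` image never matches a `freebsd/amd64` or `windows/amd64` host, which is why those hosts need a Linux VM (or similar) before they can run typical Docker images.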

There’s also this cursed thing called Windows containers.

Now let me go wash my hands, keyboard and my screen after typing that

Well that’s where Java comes in /slaps knee

If this is your take, your exposure has been pretty limited. While I agree some devs take it to the extreme, Docker is not a cop-out. It and similar containerization platforms are invaluable tools.

Using devcontainers (Docker containers in the IDE, basically) I’m able to get my team developing in a consistent environment in mere minutes, without needing to bother IT.

Using Docker orchestration I’m able to do a lot in prod, such as automatic scaling, continuous deployment with automated testing, and in worst case near instantaneous reverts to a previously good state.

And that’s just how I use it as a dev.

As a self-hosting enthusiast, I can deploy new OSS projects without stepping through a lengthy install guide listing various obscure requirements, and if I did want to skip the container (which I’ve only done for a few things), I can simply read the Dockerfile to figure out what I need to do instead of hoping the install guide covers all the bases.

And if I need to migrate to a new host? A few DNS updates and SCP/rsync later and I’m done.

I’ve been really trying to push for more usage of dev containers at my org. I deal with so much hassle helping people install dependencies and deal with bizarre environment issues, and then doing it all over again every time there is turnover or someone gets a new laptop. We’re an Ops team, though, so it’s a real struggle to add the complexity of running and troubleshooting containers on top of dev concepts that are mostly new to us anyway.

Agreed there – it’s good for onboarding devs and ensuring consistent build environment.

Once an app is ‘stable’ within a docker env, great – but running it outside of a container will inevitably reveal lots of subtle issues that might be worth fixing (assumptions become evident when one’s app encounters a different toolchain version, stdlib, or other libraries/APIs…). In this age of rapid development and deployment, perhaps most shops don’t care about that since containers enable one to ignore such things for a long time, if not forever…

But like I said, I know my viewpoint is a losing battle. I just wish it wasn’t used so much as a shortcut to deployment where good documentation of dependencies, configuration and testing in varied environments would be my preference.

And yes, I run a bare-metal ‘pet’ server so I deal with configuration that might otherwise be glossed over by containerized apps. Guess I’m just crazy but I like dealing with app config at one layer (host OS) rather than spread around within multiple containers.

The container should always be updated to match production. In a non-container environment every developer has to do this independently, but with containers it only has to be done once, and then the developers pull the update, which works like a git-style diff.

Best practice is to have the people who update the production servers be responsible for updating the containers, assuming they aren’t deploying the containers directly.

It’s essentially no different than updating multiple servers, except one of those servers is then committed to a local container repository.

This also means there are snapshots of each update which can be useful in its own way.
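The “pull only the diff” and “snapshot of each update” behaviour comes from images being stacks of layers. Below is a toy Python model of that idea, not Docker’s actual storage format: each layer’s ID is a SHA-256 over its parent ID and its file changes, a tag points at a top layer, a pull copies only the layers the local store is missing, and rolling back is just re-pointing a tag at an older layer. The `Store` class and its methods are hypothetical, and deletions (which real OCI layers record as “whiteout” files) are not modelled.

```python
import hashlib
import json


def layer_id(parent, changes):
    """Content-address a layer: the same parent plus the same changes give the same ID."""
    payload = json.dumps({"parent": parent, "changes": changes}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()[:12]


class Store:
    """Toy image store: layers keyed by ID, tags pointing at top layers (hypothetical)."""

    def __init__(self):
        self.layers = {}  # layer id -> (parent id, {path: contents})
        self.tags = {}    # tag -> top layer id

    def commit(self, parent, changes):
        lid = layer_id(parent, changes)
        self.layers[lid] = (parent, changes)
        return lid

    def chain(self, top):
        """Layer IDs from the top layer down to the base."""
        ids = []
        while top is not None:
            ids.append(top)
            top = self.layers[top][0]
        return ids

    def pull(self, remote, tag):
        """Copy only the layers we don't already have; return how many were fetched."""
        top = remote.tags[tag]
        missing = [lid for lid in remote.chain(top) if lid not in self.layers]
        for lid in missing:
            self.layers[lid] = remote.layers[lid]
        self.tags[tag] = top
        return len(missing)

    def files(self, tag):
        """Materialise the filesystem by applying layers from the base upwards."""
        fs = {}
        for lid in reversed(self.chain(self.tags[tag])):
            fs.update(self.layers[lid][1])
        return fs


if __name__ == "__main__":
    registry = Store()
    base = registry.commit(None, {"/etc/os-release": "debian"})
    v1 = registry.commit(base, {"/app/lib.py": "v1"})
    registry.tags["app:latest"] = v1

    dev = Store()
    print(dev.pull(registry, "app:latest"))        # 2 (base layer + v1 layer)

    v2 = registry.commit(v1, {"/app/lib.py": "v2"})
    registry.tags["app:latest"] = v2
    print(dev.pull(registry, "app:latest"))        # 1 (only the new layer)
    print(dev.files("app:latest")["/app/lib.py"])  # v2

    dev.tags["app:latest"] = v1                    # roll back to the earlier snapshot
    print(dev.files("app:latest")["/app/lib.py"])  # v1
```

Because the old layers are still in the store, the rollback costs nothing beyond moving the tag, which is the property behind the “near instantaneous reverts” mentioned earlier in the thread.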

It eliminates the dependency of specific distributions problem and, maybe more importantly, it solves the dependency of specific distribution versions problem (i.e. working fine now but might not work at all later in the very same distribution because some libraries are missing or default configuration is different).

For example, one of the games I have in my GOG library is over 10 years old and has a native Linux binary, which won’t work in a modern Debian-based distro by default because some of the libraries it requires aren’t installed (meanwhile, the Windows binary works just fine with Wine). It would be kinda deluded to expect the devs to keep updating the native Linux build (or even the Windows one) for over a decade, whilst if it had been released as a Docker app, that would not be a problem.

So yeah, stuff like Docker does have a reasonable justification when it comes to isolating from some external dependencies which the application devs have no control over, especially when it comes to future-proofing your app: the Docker API itself needs to remain backwards compatible, but there is no requirement that the Linux distros are backwards compatible (something which would be much harder to guarantee).

Mind you, Docker and similar is a bit of a hack to solve a systemic (cultural even) problem in software development which is that devs don’t really do proper dependency management and just throw in everything and the kitchen sink in terms of external libraries (which then depend on external libraries which in turn depend on more external libraries) into the simplest of apps, but that’s a broader software development culture problem and most of present day developers only ever learned the “find some library that does what you need and add it to the list of dependencies of your build tool” way of programming.

I would love it if we solved what’s essentially the core technical architecture problem in present-day software development practices, but I have no idea how we can do so, hence the “hack” of things like Docker of pretty much including the whole runtime environment (funnily enough, a variant of the old way of building your apps statically with every dependency) to work around it.

Ever notice how most Docker images are based on Ubuntu, arguably the worst distro to use for dependency management?

The other core issue is people using docker as a configuration solution with stuff like compose.

If I want containers, I usually just use LXC.

The only Docker project I liked was Docker-OSX, which made spinning up OSX VMs easy, but again it was basically 80% configuration for libvirt.

I hate that it puts package management in devs’ hands. The same devs who usually want root access to run their application and don’t know a vulnerability scan for the life of them. So now, rather than having the one up-to-date version of a package on my system, I may have 3 different old ones with differing vulnerabilities, and devs who don’t want to change it because “I need this version because it works!”

I’d argue that’s just a ripple effect of being a bad dev, not necessarily the tool’s fault, but I do get where you are coming from. But also, vulnerabilities in some package in a container would be isolated to that container without a further exploit chain.

I love docker, it of course comes with some inefficiencies, but let’s be real, getting an app to run on every possible environment with any possible other app or configuration or… that could interfere with yours in some way is hell.

In an ideal world, something like Docker is indeed not needed, but the past decades have proven beyond a doubt that, alas, we don’t live in this utopia. So something like Docker that just sets up a private environment for the app so that nothing else can interfere with it… why not? Anything I’ve got running on Docker is just so stable. I never have to worry that any change I make might affect those apps. Updating them is automated, …

Not wasting my and the developers time in exchange for a bit of computer resources, sounds like a good deal. If we find a better way for apps to be able to run on any environment, that would of course be even better, but we haven’t, so docker it is :).

I agree that it’s a “cop-out”, but the issue it mitigates is not an individual one but a systemic one. We’ve made it very, very difficult for apps not to rely on environmental conditions that are effectively impossible to control without VMs or containerization. That’s bad, but it’s not fixable by asking all app developers to make their apps work in every platform and environment, because that’s a Herculean task even for a single program. (Just look at all the compatibility work in a codebase that really does work everywhere, such as vim.)

Docker, or containers in general, provide isolation too, not just declarative image generation. It’s all neatly packaged into one tool that isn’t that heavy on the system either. It’s not a cop-out at all.

If I could choose, not for laziness, but for reproducibility and compatibility, I would only package software in 3 formats:

- Nix package

- Container image

- Flatpak

The rest of the native packaging formats are all good in their own way, but not as good. Some may have specific use cases that make them the best choice, like AppImage, soooo, result…

Yeah, no universal packaging format yet

Now if only Docker could solve the “hey, I’m caching a layer that I think didn’t change” (Narrator: it did) problem, where even setting the “don’t fucking cache” flag doesn’t always work. So many debug issues come up when devs don’t realize this and they’re like, “but I changed the file, and the change doesn’t work!”

`docker system prune -a` and beat that SSD into submission until it dies, alas.

My favourite is when Docker refuses to build unless you enable the no-cache options. It claims there’s no disk space (there’s plenty; it might be some issue with Qubes and dynamic disk sizing). I set up a network cache to cache packages at the network level, and it’s saved me years in build time.
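One common source of the “it didn’t change (Narrator: it did)” surprise is how the build cache decides what counts as unchanged. Per Docker’s build-cache documentation, a `COPY`/`ADD` step is matched on checksums of the files it copies, while a `RUN` step is matched only on its command text, so a `RUN apt-get update` (or anything else that fetches remote content) stays cached even after the remote side has changed; and once one step misses, every later step is rebuilt. The Python below is a simplified model of that rule, not Docker’s implementation; the step tuples and the `build` function are hypothetical, and `no_cache=True` stands in for `docker build --no-cache`.

```python
import hashlib


def _digest(*parts):
    """Hash a sequence of strings with a separator so parts can't run together."""
    h = hashlib.sha256()
    for part in parts:
        h.update(part.encode())
        h.update(b"\0")
    return h.hexdigest()[:12]


def build(steps, context, cache, no_cache=False):
    """Simulate a layered build and report which steps were CACHED or BUILT.

    steps    -- list of ("COPY", path) or ("RUN", command) tuples (hypothetical format)
    context  -- dict mapping path -> file contents in the build context
    cache    -- set of cache keys, shared between builds
    no_cache -- ignore the cache entirely, like `docker build --no-cache`
    """
    parent = "scratch"
    missed = no_cache
    report = []
    for kind, arg in steps:
        if kind == "COPY":
            # COPY/ADD: the key includes a checksum of the copied file's contents.
            key = _digest(parent, kind, arg, _digest(context[arg]))
        else:
            # RUN: the key is only the command text, never what the command downloads.
            key = _digest(parent, kind, arg)
        if not missed and key in cache:
            report.append("CACHED")
        else:
            missed = True  # after one miss, every later step is rebuilt
            cache.add(key)
            report.append("BUILT")
        parent = key  # the next step's key depends on this layer
    return report


if __name__ == "__main__":
    cache = set()
    steps = [("COPY", "requirements.txt"), ("RUN", "apt-get update"), ("COPY", "app.py")]
    ctx = {"requirements.txt": "flask", "app.py": "print('v1')"}

    print(build(steps, ctx, cache))  # ['BUILT', 'BUILT', 'BUILT']
    ctx["app.py"] = "print('v2')"
    print(build(steps, ctx, cache))  # ['CACHED', 'CACHED', 'BUILT']
    # Even if the package mirror changed upstream, the RUN text is identical:
    print(build(steps, ctx, cache))  # ['CACHED', 'CACHED', 'CACHED']
    print(build(steps, ctx, cache, no_cache=True))  # ['BUILT', 'BUILT', 'BUILT']
```

The same rule is why Dockerfiles usually put rarely-changing steps first: a change near the top invalidates everything below it.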